42 soft labels deep learning

Learning classification models with soft-label information. Materials and methods: Two types of methods that can learn improved binary classification models from soft labels are proposed. The first relies on probabilistic/numeric labels, the other on ordinal categorical labels. We study and demonstrate the benefits of these methods for learning an alerting model for heparin-induced thrombocytopenia.

A semi-supervised learning approach for soft labeled data | IEEE ... In some machine learning applications, using soft labels is more useful and informative than using crisp labels. Soft labels indicate the degree of membership of the training data in the given classes. Often only a small amount of labeled data is available while unlabeled data is abundant, so it is important to make use of the unlabeled data. In this paper we propose an approach for Fuzzy-Input ...
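The first snippet mentions learning binary classifiers from probabilistic/numeric soft labels. A common way to do this is to use the soft label p in [0, 1] directly as the target in binary cross-entropy. That is an assumption here, not necessarily the method of the cited paper. Below is a minimal plain-Python sketch; the function names and the example targets are hypothetical.

```python
import math


def soft_binary_cross_entropy(p_target: float, q_pred: float, eps: float = 1e-12) -> float:
    """Binary cross-entropy where the target is a probability p in [0, 1], not a 0/1 label."""
    # Clip the prediction so math.log never receives exactly 0.
    q = min(max(q_pred, eps), 1.0 - eps)
    return -(p_target * math.log(q) + (1.0 - p_target) * math.log(1.0 - q))


def mean_loss(targets, preds):
    """Average the per-example soft-label loss over a batch."""
    losses = [soft_binary_cross_entropy(p, q) for p, q in zip(targets, preds)]
    return sum(losses) / len(losses)


# Hypothetical soft labels, e.g. the fraction of experts who judged each case positive.
soft_targets = [0.9, 0.2, 0.6]
predictions = [0.8, 0.3, 0.5]
print(mean_loss(soft_targets, predictions))
```

For a fixed soft target p, this loss is minimized when the prediction q equals p. The model is therefore pushed toward the annotated degree of belief rather than all the way to 0 or 1. With p restricted to 0 or 1, it reduces to ordinary binary cross-entropy.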

What is Label Smoothing? - Towards Data Science. Label smoothing is a regularization technique that addresses both problems: overconfidence and poor calibration. Overconfidence and calibration: a classification model is calibrated if its predicted probabilities of outcomes reflect their accuracy. For example, consider 100 examples within our dataset, each with predicted probability 0.9 by our model. If the model is calibrated, about 90 of those examples should be classified correctly.
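One way to make the calibration idea concrete is expected calibration error (ECE), a common calibration metric that the snippet does not name. ECE groups predictions into equal-width confidence bins, compares average confidence with accuracy in each bin, and averages the gaps weighted by bin size. Below is a minimal sketch; the default of 10 bins is an assumption.

```python
def expected_calibration_error(confidences, correct, n_bins=10):
    """ECE: bin predictions by confidence, then average |confidence - accuracy| weighted by bin size."""
    n = len(confidences)
    ece = 0.0
    for b in range(n_bins):
        lo, hi = b / n_bins, (b + 1) / n_bins
        # Bin b covers (lo, hi]; bin 0 also takes a confidence of exactly 0.
        idx = [i for i, c in enumerate(confidences) if lo < c <= hi or (b == 0 and c == 0.0)]
        if not idx:
            continue
        avg_conf = sum(confidences[i] for i in idx) / len(idx)
        accuracy = sum(1 for i in idx if correct[i]) / len(idx)
        ece += len(idx) / n * abs(avg_conf - accuracy)
    return ece


# 100 predictions at confidence 0.9 with 90 correct: calibrated, ECE close to 0.
print(expected_calibration_error([0.9] * 100, [True] * 90 + [False] * 10))
# Same confidence but only 60 correct: overconfident, ECE close to 0.3.
print(expected_calibration_error([0.9] * 100, [True] * 60 + [False] * 40))
```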


Logistic Regression - Deep Learning Wizard. We try to make learning deep learning, deep Bayesian learning, and deep reinforcement learning math and code easier. Used by thousands. ... Where the labels are \(y_0 = 0\) and \(y_1 = 1\). Also, it's bolded because it's a vector, not a matrix. ... This is because soft(arg)max returns the probability distribution over K classes, a vector. ...

Deep Learning for AI | July 2021 | Communications of the ACM. In supervised learning, a label for one of N categories conveys, on average, at most \(\log_2(N)\) bits of information about the world. In model-free reinforcement learning, a reward similarly conveys only a few bits of information. In contrast, audio, images and video are high-bandwidth modalities that implicitly convey large amounts of information about the structure of the world.

Label Smoothing: An ingredient of higher model accuracy. Your labels would be 0 for cat and 1 for not cat. Now, say label_smoothing = 0.2. Using the label-smoothing equation new_onehot_labels = onehot_labels * (1 - label_smoothing) + label_smoothing / num_classes, we get: new_onehot_labels = [0 1] * (1 - 0.2) + 0.2 / 2 = [0 1] * 0.8 + 0.1 = [0.1 0.9]. These are soft labels rather than hard labels (0 and 1).
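The label-smoothing arithmetic in the cat example can be sketched as below. It assumes the usual form y_smooth = y * (1 - label_smoothing) + label_smoothing / K, where K is the number of classes. The function name is hypothetical; in Keras the same idea is exposed through the `label_smoothing` argument of `tf.keras.losses.CategoricalCrossentropy`.

```python
def smooth_labels(one_hot, label_smoothing):
    """Label smoothing: y * (1 - label_smoothing) + label_smoothing / K."""
    if not 0.0 <= label_smoothing <= 1.0:
        raise ValueError("label_smoothing must be in [0, 1]")
    k = len(one_hot)  # number of classes
    return [y * (1.0 - label_smoothing) + label_smoothing / k for y in one_hot]


# Two classes (index 0 = cat, index 1 = not cat); this sample is "not cat".
print(smooth_labels([0, 1], 0.2))  # approximately [0.1, 0.9]
```

The smoothed vector still sums to 1. For any label_smoothing below 1, the true class keeps the largest value.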

Deep Learning: Dealing with noisy labels | by Tarun B | Medium. MentorNet: Learning data-driven curriculum for very deep neural networks on corrupted labels. In International Conference on Machine Learning. [6] Malach, E. and Shalev-Shwartz, S. (2017). ...

Learning Soft Labels via Meta Learning - researchgate.net. The learned labels continuously adapt themselves to the model's state, thereby providing dynamic regularization. When applied to the task of supervised image classification, our method leads to...

Meta Soft Label Generation for Noisy Labels | IEEE Conference ... The existence of noisy labels in the dataset causes significant performance degradation for deep neural networks (DNNs). To address this problem, we propose a Meta Soft Label Generation algorithm called MSLG, which can jointly generate soft labels using meta-learning techniques and learn DNN parameters in an end-to-end fashion. Our approach adapts the meta-learning paradigm to estimate optimal ...

Deep Learning: Dealing with noisy labels. Ensembles with soft labels: ... Z. Zhang and Y. Li, "Cross-Training Deep Neural Networks for Learning from Label Noise," 2019 IEEE International Conference on Image Processing (ICIP), ...
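One simple reading of "ensembles with soft labels" is to average the softmax outputs of several models into a single soft target per sample. The sketch below works under that assumption. It is not the cross-training method of Zhang and Li, and the logits are made-up inputs.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def ensemble_soft_labels(logits_per_model):
    """Average each model's softmax distribution into one soft label for a sample."""
    probs = [softmax(logits) for logits in logits_per_model]
    n_classes = len(probs[0])
    return [sum(p[k] for p in probs) / len(probs) for k in range(n_classes)]


# Made-up logits from three models for one 3-class sample.
print(ensemble_soft_labels([[2.0, 0.5, 0.1], [1.5, 1.0, 0.2], [2.5, 0.3, 0.0]]))
```

Averaging probabilities, rather than taking a majority vote, keeps the models' disagreement visible in the target. That disagreement is the extra information a soft label carries.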

Rethinking Soft Labels for Knowledge Distillation: A Bias-Variance Tradeoff Perspective - transfer-learning.ai, February 1, 2021 (Machine Learning Papers). Knowledge distillation is an effective approach to leverage a well-trained network or an ensemble of them to guide the training of a student network.

Knowledge distillation in deep learning and its applications - PMC. Soft labels refer to the output of the teacher model. In the case of classification tasks, the soft labels represent the probability distribution among the classes for an input sample. The second category, on the other hand, considers works that distill knowledge from other parts of the teacher model, optionally including the soft labels.

What is the difference between soft and hard labels? - reddit. Hard label = binary encoded, e.g. [0, 0, 1, 0]. Soft label = probability encoded, e.g. [0.1, 0.2, 0.5, 0.2]. Soft labels have the potential to tell a model more about the meaning of each sample.

Soft Labels Transfer with Discriminative Representations Learning for ... The accuracy of pseudo labels cannot be guaranteed explicitly. To address this issue, we propose a Soft Labels transfer with Discriminative Representations learning (SLDR) framework to jointly optimize the class-wise adaptation with soft target labels and learn the discriminative domain-invariant features in a unified model.
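Teacher soft labels are commonly produced by a temperature-scaled softmax, softmax(z / T), and the student is trained to match them. This follows Hinton et al.'s formulation of knowledge distillation, which is not necessarily the variant in the snippets above. The sketch uses the KL-divergence form of the soft-target loss, scaled by T squared. T = 4 and the logits are arbitrary illustration values.

```python
import math


def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax; larger temperatures give softer distributions."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits, temperature=4.0):
    """KL(teacher || student) on softened distributions, scaled by T**2."""
    p = softmax(teacher_logits, temperature)  # teacher soft labels
    q = softmax(student_logits, temperature)  # student predictions at the same T
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return temperature ** 2 * kl


teacher = [5.0, 2.0, 0.5]  # arbitrary example logits
print(softmax(teacher, 1.0))  # sharper distribution
print(softmax(teacher, 4.0))  # softer soft labels
print(distillation_loss(teacher, [3.0, 2.5, 1.0]))
```

Raising T spreads probability mass onto the non-top classes, which exposes how the teacher ranks the "wrong" answers. The T**2 factor keeps the gradient scale of this term roughly independent of the chosen temperature.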

Building Custom Deep Learning Based OCR models. Jun 15, 2021 · Deep learning approaches have improved over the last few years, reviving interest in the OCR problem, where neural networks can be used to combine the tasks of localizing text in an image and understanding what the text is. Deep convolutional architectures, attention mechanisms, and recurrent networks have gone a long ...

Dynamic Auxiliary Soft Labels for decoupled learning. The long-tailed distribution of a dataset is one of the major challenges of deep learning: convolutional neural networks perform poorly at identifying classes with only a few samples. ... We use soft labels to improve the performance of the decoupled learning framework by proposing a Dynamic Auxiliary Soft Labels (DaSL) method ...

Label smoothing with Keras, TensorFlow, and Deep Learning. This type of label assignment is called soft label assignment. Unlike hard label assignment, where class labels are binary (i.e., positive for one class and negative for all other classes), soft label assignment allows the positive class to have the largest probability while all other classes have a very small probability.

COLAM: Co-Learning of Deep Neural Networks and Soft Labels via ... In this work, we study the problem of learning optimal soft labels for training samples subject to the deep learning process, and further propose a novel algorithm that intends to co-learn both "best" soft labels and deep neural networks through an end-to-end training procedure.

MetaLabelNet: Learning to Generate Soft-Labels from Noisy-Labels. Soft-labels are generated from extracted features of data instances, and the mapping function is learned by a single-layer perceptron (SLP) network, which is called MetaLabelNet. Next, the base classifier is trained using these generated soft-labels. These iterations are repeated for each batch of training data.
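The snippet describes soft labels produced from instance features by a single-layer perceptron, with the base classifier then trained on those labels. The sketch below shows only the forward pieces. It has an SLP that maps a feature vector to a soft label through a softmax, plus the soft cross-entropy a base classifier could minimize against that label. The weights are hypothetical, and MetaLabelNet's meta-learning update of the SLP is not reproduced here.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def slp_soft_label(features, weights, bias):
    """Hypothetical single-layer perceptron: softmax(W x + b) used as a soft label."""
    logits = [sum(w * x for w, x in zip(row, features)) + b for row, b in zip(weights, bias)]
    return softmax(logits)


def soft_cross_entropy(soft_label, predicted_probs, eps=1e-12):
    """Cross-entropy -sum_k y_k * log(q_k) against a soft target y."""
    return -sum(y * math.log(max(q, eps)) for y, q in zip(soft_label, predicted_probs))


# Hypothetical 2-class SLP over 3 extracted features.
W = [[0.4, -0.2, 0.1], [-0.3, 0.5, 0.0]]
b = [0.0, 0.1]
features = [1.0, 0.5, 2.0]
target = slp_soft_label(features, W, b)
print(target, soft_cross_entropy(target, [0.7, 0.3]))
```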

Deep learning in environmental remote sensing ... - ScienceDirect May 01, 2020 · Deep learning (DL), which has attracted broad attention in recent years, is a potential tool focusing on large-size and deep artificial neural networks. ... The labels' dimension k is the number of neurons in the output layer. Each neuron in the output layer is equal to the corresponding neuron of the summation layer divided by the first neuron ...

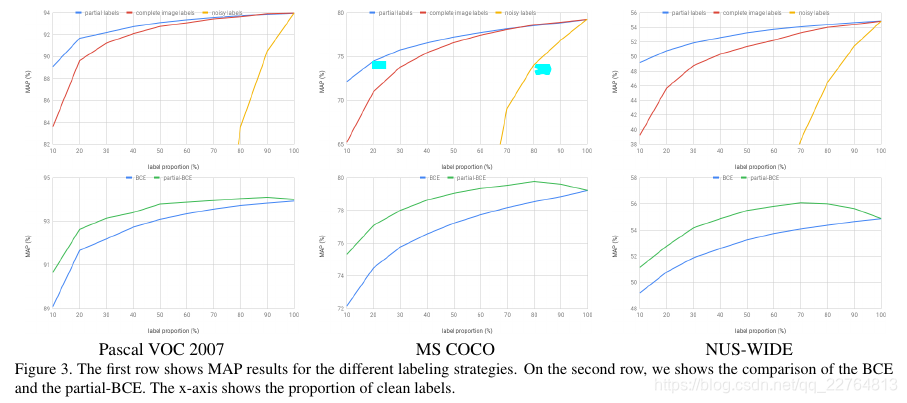

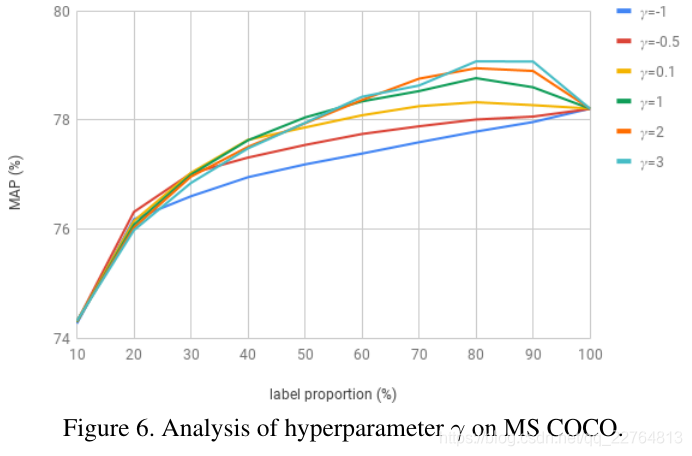

[multi-label] Learning a Deep ConvNet for Multi-label Classification with Partial Labels_Kitty and Orange's blog ...

(PDF) Ian Goodfellow, Yoshua Bengio, and Aaron Courville: Deep learning … Oct 29, 2017 · PDF | On Oct 29, 2017, Jeff Heaton published Ian Goodfellow, Yoshua Bengio, and Aaron Courville: Deep learning: The MIT Press, 2016, 800 pp, ISBN: 0262035618 | Find, read and cite all the research ...

Soft-label Dataset Distillation for Deep Learning. Dataset distillation is a method for reducing dataset sizes by learning a small number of synthetic samples containing all the information of a large dataset. This has several benefits like speeding up model training, reducing energy consumption, and reducing required storage space.

An Overview of Multi-Task Learning for Deep Learning May 29, 2017 · So far, we have focused on theoretical motivations for MTL. To make the ideas of MTL more concrete, we will now look at the two most commonly used ways to perform multi-task learning in deep neural networks. In the context of Deep Learning, multi-task learning is typically done with either hard or soft parameter sharing of hidden layers.
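In hard parameter sharing, all tasks use the same hidden-layer weights and keep task-specific output layers. In soft parameter sharing, each task keeps its own parameters, and the distance between them is regularized so they stay similar. Below is a minimal sketch of an L2 soft-sharing penalty for two tasks, using flattened parameter lists; the default strength of 0.01 and the weights are hypothetical.

```python
def soft_sharing_penalty(params_task_a, params_task_b, strength):
    """L2 penalty on the distance between two tasks' corresponding parameters."""
    return strength * sum((a - b) ** 2 for a, b in zip(params_task_a, params_task_b))


def multitask_loss(task_losses, params_task_a, params_task_b, strength=0.01):
    """Sum of per-task losses plus the soft-sharing regularizer."""
    return sum(task_losses) + soft_sharing_penalty(params_task_a, params_task_b, strength)


# Hypothetical hidden-layer weights for two tasks, flattened to lists.
w_a = [0.5, -1.0, 2.0]
w_b = [0.4, -0.8, 2.5]
print(multitask_loss([0.7, 1.2], w_a, w_b))
```

With a very large strength the two parameter sets are forced together, approaching hard sharing. With strength 0 the tasks are trained independently.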

Deep Learning: A Comprehensive Overview on Techniques, … Aug 18, 2021 · Deep learning (DL), a branch of machine learning (ML) and artificial intelligence (AI), is nowadays considered a core technology of today’s Fourth Industrial Revolution (4IR or Industry 4.0). Owing to its ability to learn from data, DL technology, which originated from artificial neural networks (ANN), has become a hot topic in the context of computing, and is widely …

What is the definition of "soft label" and "hard label"? A soft label is one which has a score (probability or likelihood) attached to it. So an element is a member of the class in question with a probability/likelihood score of, e.g., 0.7; this implies that an element can be a member of multiple classes (presumably with different membership scores), which is usually not possible with hard labels.
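The difference between the two label types can be shown in a few lines. Collapsing a soft label to a hard one keeps only the top-scoring class. Reading the scores against a threshold lets one element belong to several classes. The scores and the 0.5 threshold below are arbitrary, hypothetical choices.

```python
def to_hard_label(soft_label):
    """Keep only the top-scoring class as a one-hot hard label (ties go to the first)."""
    best = max(range(len(soft_label)), key=lambda k: soft_label[k])
    return [1 if k == best else 0 for k in range(len(soft_label))]


def member_classes(soft_label, threshold=0.5):
    """Indices of classes whose membership score reaches the threshold."""
    return [k for k, score in enumerate(soft_label) if score >= threshold]


scores = [0.7, 0.6, 0.1]  # hypothetical independent membership scores
print(to_hard_label(scores))   # [1, 0, 0]
print(member_classes(scores))  # [0, 1]
```

The hard label discards the fact that class 1 scored almost as high as class 0. That lost information is what soft labels preserve.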

COLAM: Co-Learning of Deep Neural Networks and Soft Labels via ... The key principle here is to regularize the deep learning procedure with certain privileged prior information [15, 26] embedded in the soft labels. With a set of predefined rules, label smoothing [23] was first proposed to soften the hard labels to regularize the training objectives with smoothness.

Using Deep Learning for Image-Based Plant Disease Detection Sep 22, 2016 · Learning rate policy: Step (decreases by a factor of 10 every 30/3 epochs), Momentum: 0.9, Weight decay: 0.0005, Gamma: 0.1, Batch size: 24 (in case of GoogLeNet), 100 (in case of AlexNet). All the above experiments were conducted using our own fork of Caffe (Jia et al., 2014), which is a fast, open source framework for deep learning. The basic ...
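The "Step" learning-rate policy with Gamma 0.1 multiplies the rate by 0.1 at fixed intervals; Caffe's step policy computes base_lr * gamma ^ floor(iter / stepsize). Below is a minimal Python sketch. The base_lr of 0.005 and the step size of 10 epochs are hypothetical, because the snippet gives neither a base rate nor an unambiguous interval.

```python
def step_learning_rate(base_lr, gamma, step_size, step):
    """Caffe-style "step" policy: base_lr * gamma ** floor(step / step_size)."""
    return base_lr * gamma ** (step // step_size)


# Hypothetical schedule: base_lr = 0.005, multiplied by gamma = 0.1 every 10 epochs.
for epoch in (0, 9, 10, 25):
    print(epoch, step_learning_rate(0.005, 0.1, 10, epoch))
```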

[2007.05836] Meta Soft Label Generation for Noisy Labels. ... generate soft labels using meta-learning techniques and learn DNN parameters in an end-to-end fashion. Our approach adapts the meta-learning paradigm to estimate the optimal label distribution by checking gradient directions on both noisy training data and noise-free meta-data. In order to iteratively update ...

Understanding Deep Learning on Controlled Noisy Labels Posted by Lu Jiang, Senior Research Scientist and Weilong Yang, Senior Staff Software Engineer, Google Research. The success of deep neural networks depends on access to high-quality labeled training data, as the presence of label errors (label noise) in training data can greatly reduce the accuracy of models on clean test data. Unfortunately, large training datasets almost always contain ...


Python Deep Learning - Implementations - Tutorialspoint. In this implementation of deep learning, our objective is to predict customer attrition (churn) for a certain bank, i.e., which customers are likely to leave this bank's service. The dataset used is relatively small and contains 10000 rows with 14 columns. We are using the Anaconda distribution and frameworks like Theano, TensorFlow and Keras.

Deep learning model to predict complex stress and strain fields in ... Apr 09, 2021 · In recent years, ML methods, especially deep learning (DL), have revolutionized our perspective of designing materials, modeling physical phenomena, and predicting properties (21–26). DL algorithms developed for computer vision and natural language processing can be used to segment biomedical images, design de novo proteins (28–30), and generate …


Dynamic Auxiliary Soft Labels for decoupled learning. Section 3.2.1, Representation learning stage: The Dynamic Auxiliary Soft Label model consists of three parts. In addition to the feature representation network RN and the classifier C under the decoupled learning framework, we have designed an auxiliary network AN for the two-stage training to generate auxiliary soft labels.

Unable to locate the downloaded datasets in Google Colab - Part 1 (2019) - Deep Learning Course ...

Validation of Soft Labels in Developing Deep Learning Algorithms for Detecting Lesions of Myopic Maculopathy From Optical Coherence Tomographic Images. The predicted probabilities from the models trained with soft labels were close to the assessments made by myopia specialists.
